The result highlights a fundamental tension: Either the rules of quantum mechanics don’t always apply, or at least one basic assumption about reality must be wrong.

The founders of quantum mechanics understood it to be deeply, profoundly weird. Albert Einstein, for one, went to his grave convinced that the theory had to be just a steppingstone to a more complete description of nature, one that would do away with the disturbing quirks of the quantum.

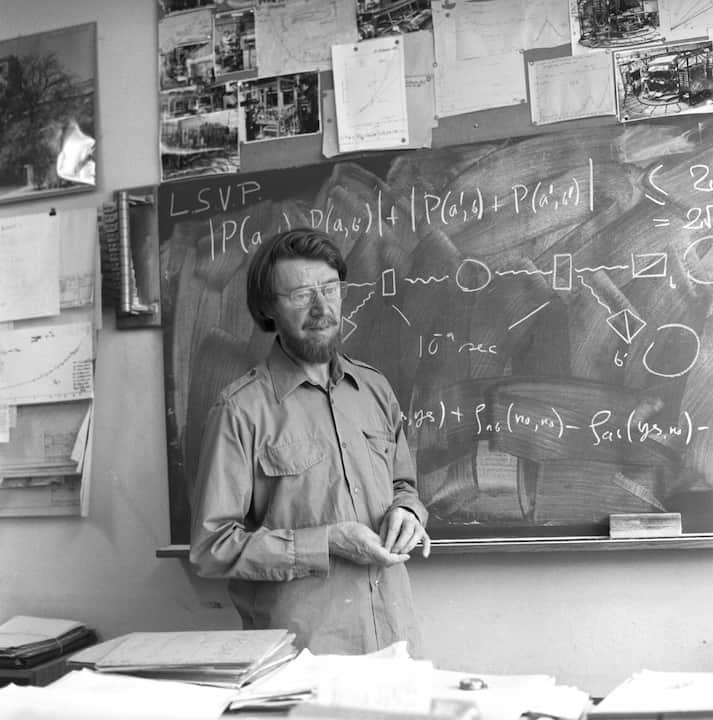

Then, in 1964, John Stewart Bell proved a theorem that made it possible to test experimentally whether quantum theory was obscuring a full description of reality, as Einstein claimed. Experimenters have since used Bell’s theorem to rule out the possibility that beneath all the apparent quantum craziness (the randomness and the spooky action at a distance) lies a hidden deterministic reality that obeys the laws of relativity.

Now a new theorem has taken Bell’s work a step further. The theorem makes some reasonable-sounding assumptions about physical reality. It then shows that if a certain experiment were carried out — one that is, to be fair, extravagantly complicated — the expected results according to the rules of quantum theory would force us to reject one of those assumptions.

According to Matthew Leifer, a quantum physicist at Chapman University who did not participate in the research, the new work focuses attention on a class of interpretations of quantum mechanics that until now have managed to escape serious scrutiny from similar “no-go” theorems.

Broadly speaking, these interpretations argue that quantum states reflect our own knowledge of physical reality, rather than being faithful representations of something that exists out in the world. The exemplar of this group of ideas is the Copenhagen interpretation, the textbook version of quantum theory, which is most popularly understood to suggest that particles don’t have definite properties until those properties are measured. Other Copenhagen-like quantum interpretations go even further, characterizing quantum states as subjective to each observer.

“If you’d have said to me a few years ago that you can make a no-go theorem against certain kinds of Copenhagen-ish interpretations that some people really believe in,” said Leifer, “I’d have said, ‘That’s nonsense.’” The latest theorem is, according to Leifer, “assailing the unassailable.”

Bell’s Toll

Bell’s 1964 theorem brought mathematical rigor to debates that had started with Einstein and Niels Bohr, one of the main proponents of the Copenhagen interpretation. Einstein argued for the existence of a deterministic world that lies beneath quantum theory; Bohr argued that quantum theory is complete and that the quantum world is indelibly probabilistic.

Bell’s theorem makes two explicit assumptions. One is that physical influences are “local” — they can’t travel faster than the speed of light. In addition, it assumes (à la Einstein) that there’s a hidden deterministic reality not modeled by the mathematics of quantum mechanics. A third assumption, unstated but implicit, is that experimenters have the freedom to choose their own measurement settings.

Given these assumptions, a Bell test involves two parties, Alice and Bob, who make measurements on numerous pairs of particles, one pair at a time. Each pair is entangled, so that the two particles’ properties are quantum mechanically linked: If Alice measures the state of her particle, her measurement seems to instantly affect the state of Bob’s particle, even if the two are miles apart.
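To make “quantum mechanically linked” concrete, here is a minimal Python sketch, not taken from the research described here. It assumes a textbook model: a pair of photons in the entangled polarization state (|HH⟩ + |VV⟩)/√2, with Alice and Bob each measuring through a polarizer set at some angle. The names `basis`, `joint_probs` and `PHI_PLUS` are illustrative. The sketch shows that each person alone sees a 50/50 coin flip, yet their results always agree when the angles match. Because Alice’s own statistics don’t depend on Bob’s setting, the link can’t be used to send a message.

```python
import math

def basis(theta):
    """Polarizer at angle theta: outcome +1 projects onto (cos, sin),
    outcome -1 onto the orthogonal direction (-sin, cos)."""
    return {+1: (math.cos(theta), math.sin(theta)),
            -1: (-math.sin(theta), math.cos(theta))}

# Entangled pair (|HH> + |VV>)/sqrt(2): amplitudes indexed by (Alice bit, Bob bit),
# where bit 0 = horizontal (H) and bit 1 = vertical (V).
PHI_PLUS = {(0, 0): 1 / math.sqrt(2), (1, 1): 1 / math.sqrt(2)}

def joint_probs(a, b, state=PHI_PLUS):
    """Born-rule probabilities P(x, y) for Alice at angle a, Bob at angle b."""
    probs = {}
    for x, u in basis(a).items():
        for y, v in basis(b).items():
            # Overlap of the two-photon state with the product outcome u (x) v.
            amp = sum(c * u[i] * v[j] for (i, j), c in state.items())
            probs[(x, y)] = amp ** 2
    return probs

same = joint_probs(0.0, 0.0)
print(round(same[(+1, +1)] + same[(-1, -1)], 6))  # 1.0: matched angles always agree

# Alice's chance of +1 is 1/2 no matter which angle Bob picks.
for b in (0.0, math.pi / 8, math.pi / 4):
    p = joint_probs(0.0, b)
    print(round(p[(+1, +1)] + p[(+1, -1)], 6))  # 0.5
```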

Bell’s theorem suggested an ingenious way to set up an experiment. If the correlations between Alice’s and Bob’s measurements are equal to or below a certain value, the results are consistent with Einstein’s view: There could be a local hidden reality. If the correlations are above this value (as quantum theory predicts), then one of Bell’s assumptions must be wrong, and the dream of a local hidden reality must die.
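The article doesn’t specify which inequality the experiments check. Most modern Bell tests use the CHSH version (after Clauser, Horne, Shimony and Holt), in which Alice and Bob each choose between two settings and the correlations are combined into S = E(a, b) − E(a, b′) + E(a′, b) + E(a′, b′), where each E runs from −1 to +1. Any local hidden reality keeps |S| at or below 2. The sketch below assumes that CHSH form and a polarization-entangled photon pair. It confirms the bound by brute force over every predetermined set of answers, then evaluates the textbook quantum prediction E(a, b) = cos 2(a − b), which reaches 2√2 ≈ 2.83 at the usual polarizer angles. The function names are illustrative.

```python
import itertools
import math

def chsh(E, a0, a1, b0, b1):
    """CHSH combination S = E(a0,b0) - E(a0,b1) + E(a1,b0) + E(a1,b1)."""
    return E(a0, b0) - E(a0, b1) + E(a1, b0) + E(a1, b1)

def best_local_value():
    """Largest |S| over local hidden-variable strategies. Each side's +1/-1
    answer is fixed in advance for each of its two settings (labeled 0 and 1).
    Randomized strategies are averages of these, so they cannot do better."""
    best = 0
    for A0, A1, B0, B1 in itertools.product((-1, 1), repeat=4):
        outcome = {(0, 0): A0 * B0, (0, 1): A0 * B1,
                   (1, 0): A1 * B0, (1, 1): A1 * B1}
        s = chsh(lambda x, y: outcome[(x, y)], 0, 1, 0, 1)
        best = max(best, abs(s))
    return best

def quantum_E(a, b):
    """Quantum correlation for (|HH> + |VV>)/sqrt(2) with polarizers at a, b."""
    return math.cos(2 * (a - b))

print(best_local_value())  # 2
# Standard angles: 0 and 45 degrees for Alice, 22.5 and 67.5 degrees for Bob.
print(round(chsh(quantum_E, 0, math.pi / 4, math.pi / 8, 3 * math.pi / 8), 4))  # 2.8284
```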

Physicists spent nearly 50 years performing increasingly exacting Bell tests. By 2015, these experiments had essentially settled the debate. The measured correlations exceeded the bound set by Bell’s inequality, just as quantum mechanics predicts. As a consequence, the idea of a local hidden reality was put to rest.

Weak Assumptions, Strong Theory

The new work draws from the tradition started by Bell, but it also relies on a slightly different experimental setup, one originally devised by the physicist Eugene Wigner.

In Wigner’s thought experiment, a person we’ll call Wigner’s friend is inside a lab. The friend measures the state of a particle that’s in a superposition (a quantum combination) of two states, say 0 and 1. The measurement collapses the particle’s quantum state to either 0 or 1, and the friend records the outcome.

Wigner himself is outside the lab. From his perspective, the lab and his friend — assuming they are completely isolated from all environmental disturbances — continue to evolve together quantum mechanically. After all, quantum mechanics makes no claims about the size of the system to which the theory applies. In principle, it applies to elementary particles, to the sun and the moon, and to human beings.

If quantum mechanics is universally applicable, Wigner argued, then both the particle and Wigner’s friend are now entangled and in a quantum superposition, even though the friend’s measurement has ostensibly already collapsed the particle’s superposition.
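One common way to formalize Wigner’s argument, assumed here rather than drawn from the article, is to treat the friend’s memory as a second qubit and the measurement as a controlled-NOT operation that copies the particle’s value into that memory. The minimal Python sketch below (function names hypothetical) applies that operation to a particle in an equal superposition. Viewed from outside, the result is the entangled state (|0,0⟩ + |1,1⟩)/√2, which still carries an off-diagonal “coherence” term that a genuine collapse would have erased. In principle, Wigner could detect that term with a suitable interference measurement on the whole lab.

```python
import math

def cnot(state):
    """Friend's measurement as a unitary: flip the memory bit if the particle is 1.
    `state` maps (particle, memory) bit pairs to amplitudes."""
    out = {}
    for (p, m), amp in state.items():
        key = (p, m ^ p)
        out[key] = out.get(key, 0) + amp
    return out

def density_matrix(state):
    """rho[(k1, k2)] = amp(k1) * conj(amp(k2)) for a pure state."""
    return {(k1, k2): a1 * a2.conjugate()
            for k1, a1 in state.items() for k2, a2 in state.items()}

# Particle in a superposition of 0 and 1; the friend's memory starts blank (0).
r = 1 / math.sqrt(2)
before = {(0, 0): r, (1, 0): r}
after = cnot(before)  # Wigner's view: (|0,0> + |1,1>)/sqrt(2)

# If the friend's measurement had truly collapsed the state, Wigner would
# instead assign a 50/50 mixture of |0,0> and |1,1>, with no coherence term.
mixture = {((0, 0), (0, 0)): 0.5, ((1, 1), (1, 1)): 0.5}

rho = density_matrix(after)
print(sorted(after))                     # [(0, 0), (1, 1)]
print(round(rho[((0, 0), (1, 1))], 6))   # 0.5 (coherence survives)
print(mixture.get(((0, 0), (1, 1)), 0))  # 0 (none in the collapsed picture)
```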

The contradictions raised by Wigner’s setup highlighted fundamental and compelling questions about what qualifies as a collapse-causing measurement and whether collapse is irreversible.

As with Bell’s theorem, the authors of the new work start from assumptions that seem obvious but are stated with mathematical rigor. The first one states that experimenters have the freedom to choose the type of measurements they want to do. The second says that you can’t send a signal any faster than the speed of light. The third says that outcomes of measurements are absolute, objective facts for all observers.

Note that these “local friendliness” assumptions, as the authors call them, are weaker than Bell’s. The authors do not presume that there’s some kind of deterministic reality underlying the quantum world. Therefore, if such an experiment can be done and it works, that means “we’ve actually found out something even more profound about reality than from Bell’s theorem,” said Howard Wiseman, the director of the Center for Quantum Dynamics at Griffith University in Australia and one of the leaders of the new work.

The new theorem also identifies a large set of mathematical inequalities, which include but also extend beyond those formulated by Bell. “It’s possible to violate Bell inequalities but not violate our inequalities,” said team member Nora Tischler, also at Griffith.

And so, as with Bell, we can ask what the result would be if we applied the known rules of quantum mechanics to this new experimental setup. If the laws of quantum mechanics are universal, which means that they apply to both very small objects and larger ones, then experiments should violate the inequalities. If future experiments confirm this, then one of the three assumptions must be wrong, and quantum theory is even weirder than tests of Bell’s theorem show.

In fact, Tischler and her colleagues at Griffith have already carried out a proof-of-principle version of the experiment. And in doing so, they ended up violating the inequalities. But there’s a significant caveat to their experiment — one that hinges on what counts, in quantum mechanics, as an observer.

The Observer Spectrum

The new local friendliness theorem requires duplicating the Wigner’s-friend setup. Now we have two labs. At the first lab, Alice is outside, while her friend Charlie is inside. Bob is outside the other lab, and inside is his friend Debbie.

Into this Matryoshka-doll setup we add a pair of entangled particles. One particle is sent to Charlie, the other to Debbie. Both observers make a measurement and record the result.

It’s now Alice and Bob’s turn. Each gets to make one of three types of measurement. The first option is simple: Just ask the friend what the outcome of the measurement is.

The other two are insanely difficult. First, Alice and Bob have to exert complete quantum control over their respective friends and labs, so much so that they reverse the quantum evolution of the entire system. They undo the friend’s measurement, erase the friend’s memory, and restore the particle to its initial condition. (Clearly, the “friends” cannot be human; we’ll get to that in a moment.) At that point, Alice and Bob each randomly choose one of two different measurements, measure the particle, and jot down the result. They do this for tens of thousands of pairs of entangled particles.

The proof-of-principle experiment starts with a photon in each lab. Each friend is represented by a simple setup that makes a measurement on the photon, such that the photon takes one of two paths or enters into a superposition of taking both paths at once, depending on the initial quantum state of the photon. The friend can be thought of as a quantum bit, or qubit, which can be 0 (the photon has taken one path) or 1 (it’s taken the other path), or in some superposition of both. “You can think of the two paths as being the two memory states of the observer,” said Tischler. “And mathematically, this is like an observation.”

Alice and Bob can simply check to see which path the photon took (akin to asking Charlie and Debbie what they observed). Or they can erase their friends’ memories by making the two paths interfere with each other. The information about the path the photon took is wiped out, restoring the photon to its original state. Alice and Bob can then make their own measurements.
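The record-and-erase step can be mimicked with a toy model, under the simplifying assumption that the friend’s “measurement” is a controlled-NOT that copies the photon’s path into a memory bit, and that interfering the paths amounts to applying that operation a second time, since it is its own inverse. In the hypothetical sketch below, a photon is prepared in the diagonal state |+⟩ = (|0⟩ + |1⟩)/√2. While the friend’s record exists, a measurement along that diagonal gives “+” only half the time; once the record is undone, it gives “+” every time, which is what lets Alice and Bob make their own, fresh measurements.

```python
import math

r = 1 / math.sqrt(2)

def cnot(state):
    """Copy the photon's path bit into the friend's memory (memory ^= path).
    Applying it twice is the identity, so it also serves as the 'undo'."""
    out = {}
    for (p, m), amp in state.items():
        key = (p, m ^ p)
        out[key] = out.get(key, 0) + amp
    return out

def prob_plus(state):
    """Probability that measuring the photon alone in the diagonal basis
    |+> = (|0> + |1>)/sqrt(2) gives '+', summed over the memory bit."""
    total = 0.0
    for m in (0, 1):
        amp = r * (state.get((0, m), 0) + state.get((1, m), 0))
        total += abs(amp) ** 2
    return total

initial = {(0, 0): r, (1, 0): r}  # photon in |+>, friend's memory blank
measured = cnot(initial)          # friend records which path was taken
erased = cnot(measured)           # paths interfere again: record wiped out

print(round(prob_plus(measured), 6))  # 0.5 (the record spoils interference)
print(round(prob_plus(erased), 6))    # 1.0 (original state restored)
print(erased == initial)              # True
```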

After about 90,000 such runs, the experiment clearly showed that the local friendliness theorem’s inequalities are violated.

The loophole here is obvious. Charlie and Debbie are qubits, not people. And indeed, the researchers behind the new work aren’t saying that we need to abandon any of the three assumptions just yet. “We are not claiming that [the qubit] is a real friend or a real observation,” said Wiseman. “But it allows us to verify that quantum mechanics does violate these inequalities, even though they’re harder to violate than Bell’s inequalities.”

In general, considerable debate surrounds the question of how big and complex observers have to be. Would atoms work? Viruses? Amoebas? Some physicists would argue that any system that can obtain information about the thing it’s observing and store that information is an observer. At the other end of the spectrum are those who say that only conscious humans count.

In terms of this particular experiment, the range of possible observers is extremely large. The experiment has already been carried out with qubits as the friends. And everyone agrees that it’s impossible to do if Charlie and Debbie are humans.

The team envisions doing the experiment at a time far in the future when the observer could be an artificial general intelligence (AGI) inside a quantum computer. Such a system could enter into a superposition of observing two different results. And because the AGI would be operating in a quantum computer, the process could be reversed, erasing the memory of the observation and returning the system to its original state.

“There are many places along the way between a single qubit and a ginormous quantum computer running an artificial intelligence, where different people will have different opinions about where along that line you could say an observation has occurred,” said Wiseman. “The theorem is a completely rigorous theorem, but it leaves open the question about what an observed event is. That’s a crucial thing.”

And after all, it took about five decades for physicists to implement fully bulletproof experimental tests of Bell’s inequality. Perhaps an AGI operating on a quantum computer is no farther away.

Let’s say for the sake of argument that such a technology will one day arrive. Then when physicists do the experiment, they’ll see one of two things.

Perhaps the inequalities won’t be violated, which will imply that quantum mechanics is not universally valid — that there’s a maximum size beyond which the rules of quantum theory simply fail to apply. Such a result would allow researchers to precisely map the boundary separating the quantum and classical worlds.

Or the inequalities will be violated, as quantum mechanics predicts. In that case, one of the three commonsense assumptions will need to be abandoned. Which leads to the question: Which one?

Extreme Relativity

The theorem makes no claims as to which assumption is wrong. However, most physicists hold two of the assumptions dear. The first — that experimenters can choose what measurements to perform — would seem inviolable.

The “locality” assumption, which forbids information from traveling faster than light, prevents all manner of absurdist awkwardness with cause and effect. (Even so, supporters of Bohmian mechanics — a theory that posits a deterministic, hidden and profoundly nonlocal reality — have abandoned this second assumption.)

This leaves the third assumption: Outcomes of measurements are absolute, objective facts for all observers. Časlav Brukner, a quantum theorist at the Institute for Quantum Optics and Quantum Information in Vienna, is emphatic about the most likely wrong assumption: “Absoluteness of observed events.”

Rejecting the absoluteness of observed events would cast doubt on the standard Copenhagen interpretation, in which measurement outcomes are considered objective facts for all observers.

What’s left? Other “Copenhagen-like” interpretations — ones that argue that outcomes of measurements are not absolute, objective facts. These include QBism (a stand-alone acronym pronounced “cubism” and originally derived from “quantum Bayesianism”) and relational quantum mechanics (RQM), which has been championed by the physicist Carlo Rovelli. QBism insists that the quantum state is subjective to each observer. RQM argues that the variables that describe the quantum world, such as the position of a particle, take on actual values only when one system interacts with another. Not only that, the value for one system is always relative to the system it’s interacting with — and is not an objective fact.

But it’s been hard for no-go theorems to distinguish between the standard Copenhagen interpretation and its variants. Now, the local friendliness theorem provides a way to at least separate them into two categories, with standard Copenhagen on one side and, say, QBism and RQM on the other.

“Here you have something that really does say something significant,” said Leifer. It “really does, in some sense, vindicate people like the QBists and the Rovellis.”

Of course, proponents of other interpretations might simply claim that a violation of the inequalities would invalidate one of the other two assumptions: freedom of choice or locality.

All this effort suggests that it’s time to rethink what we want from a theory, said Jeffrey Bub, a philosopher of physics at the University of Maryland, College Park, who works on quantum foundations. “This attempt to kind of shoehorn quantum mechanics into a classical mold is just not the right way to go about it,” he said, referring to attempts to understand the quantum world through a classical lens. “We should try and align the way we think about what we want from a theory in terms of what quantum mechanics actually gives, without trying to say, ‘Well, it’s inadequate in some way, it’s faulty in some way.’ It may be that we’re stuck with quantum-like theories.”

In which case, taking the position that an observation is subjective and valid only for a given observer — and that there’s no “view from nowhere” of the type provided by classical physics — may be a necessary and radical first step.